I feel like I need to look into those 100% ARM desktop systems with those Huawei video cards soon…

It’s an interesting subject, so I was disappointed when it became clear that this text was written by AI. AI uses the following structure very often: “It’s not X, it’s Y”, so the list below tells me it’s AI beyond doubt.

I.

- This is not speculation. This is not inference from supply chain tightness or price movements or anecdotal reports from frustrated procurement officers. This is the documented operational reality of…

- This is not an analysis of a commodity market experiencing temporary tightness. This is a reconnaissance report from the front lines of a new form of economic warfare

- This is not the blunt instrument of a traditional export ban. This is a scalpel.

- The pattern suggests not reactive retaliation but proactive strategy.

- The restrictions announced in 2023, 2024, and 2025 are not isolated policy responses to specific trade disputes. They are nodes in an integrated campaign

- Tungsten is not the final escalation. It is another proof of concept.

- The question for Western policymakers and corporate strategists and institutional investors is not whether to take this seriously (…) The question is what to do about it

II.

-

This is not marketing rhetoric from mining promoters or special pleading from industry lobbyists. This is physics.

-

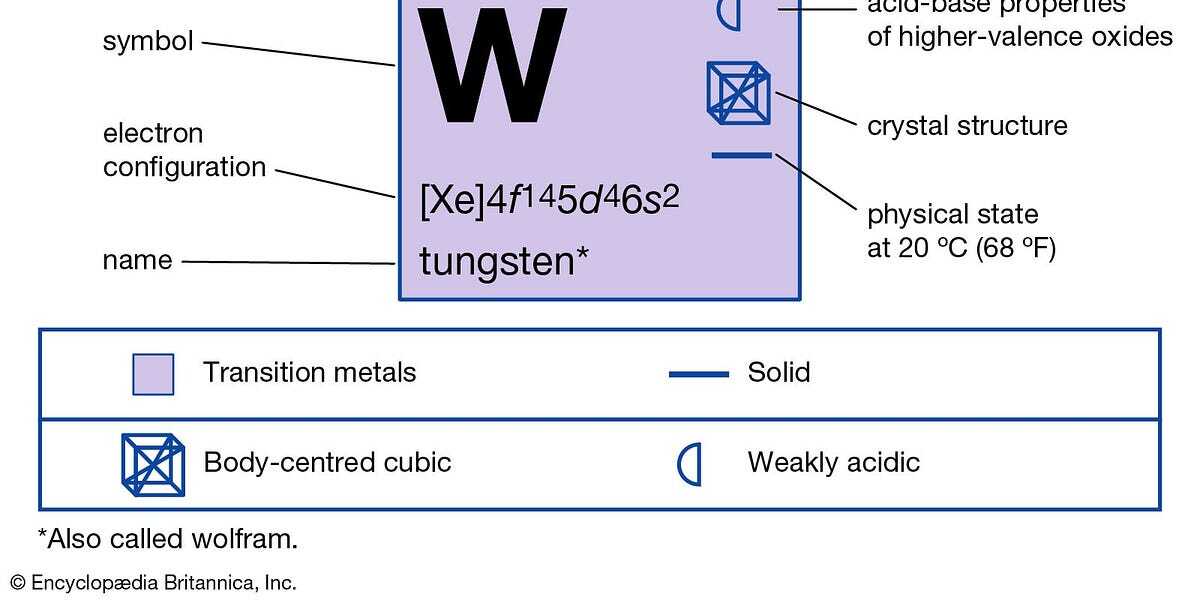

These properties are not arbitrary. They emerge from tungsten’s electronic structure

-

This is not an abstract supply chain concern. This is industrial capacity disappearing in real time

III.

- This is not a simple on-off switch. It is a tunable instrument with multiple control parameters

(Anything further is for paid subscribers.)

Well that’s annoying.

LLM> also don’t use em dashes

- It can be hard to know how much a writer relied on an LLM or how much revising/editing/proofreading they did before publishing, especially on topics you’re not versed in.

- These tells are going to get harder to clock very quickly.

- If you want your work to be taken seriously by people who understand the limitations of LLMs, then you should edit for tells (or don’t use one).

- People adopt conventions, and these LLMs are making the overuse of this phraseology pervasive. The influence is dialectical.

You might as well get used to it. LLMs are a tool that’s in wide use, and it’s delusional to think that news sites won’t be using them. Personally, I absolutely do not care if the text was formatted by AI, as long as the content is factual.

The writing style is annoying, though, and essentially eliminates the authorial voice of every writer. Everyone is churning out the same slop, everything sounds the same, all difference is being eliminated. Even writers that don’t use the slop machines sound like this, because it’s all they read. It’s only going to get worse. The internet is fucking ruined.

I read these articles for the content, and I find news writing has been terrible since long before LLMs. At least this way it’s written closer to just being a summary that you can scan through easily. You’ll be glad to know that people are already working on stuff like this, so LLM-generated content is going to read very much like traditional human-written content before long. https://muratcankoylan.com/projects/gertrude-stein-style-training/

Fine-tuning costs money, which means they aren’t going to do it. I fully expect they’ll settle for slop (they already have) and so will everyone else. You might as well get used to it. Everything gets worse forever and nothing ever gets better.

LoRAs are actually really cheap and fast to make. The article I linked explains how it literally took 2 bucks to do. I don’t really think anything is getting worse forever. Things are just changing, and that’s one constant in the world.

And it was still something like 30% detectable as AI. That tells me that every article will still read as samey, even if it’s different enough to fool a tool that was trained on the current trends. Authorial voice is lost, replaced by the machine’s voice.

It was only when they trained on authors specifically, which cost $81, that it dropped down to 3%. They won’t do that.

Give it a year and we’ll see. These things are improving at an incredible pace, and costs continue to go down as well. Things you needed to have a data center to do just a year ago can now be done on a laptop.

It’s a pretty clear marker they don’t care enough about the text being factual though - they want an easy route. If the genAI was just used for proofing or some tweaks for readability then the signs of its use wouldn’t be so obvious.

But in this case - the article is close to unreadable. Most of the words could be deleted without losing information. It aims for things that sound impressive instead of giving a clear description of what will be discussed and what’s coming.

I’m impressed you’re trying to make the case that this is likely factual beyond some broad-strokes statements that countries have trouble securing access to key imports, in this case the US and tungsten. It seemed like the article had more to say, but it was an unnecessarily tough read, so I gave up.

If you want to point out specific inaccuracies in the article then please go ahead and do that.

It was honestly too tiring to read. The main points were drowned in prose. Like the initial comment said, there’s a lot of “this is not X”, and that mostly means the sentence can be deleted.

You know you can just not read an article and move on. But if you’re going to argue about the accuracy of the article, then you kinda have to at least show an example of it being inaccurate. The reality is that there have been plenty of terrible, hard-to-read articles written long before LLMs were a thing, and this one is far from the worst I’ve seen. It seems to me that you’re just bothered that an LLM was used to put the text together.

I didn’t argue about the accuracy of the article.

I argued that using genAI and leaving in the tells was an indicator of the author not caring about accuracy, so in my experience the tells indicate that an article is likely to be low-quality information. This was in reply to someone saying that you may as well get used to LLMs.

But… I do not, nor did I, express an opinion on whether this was written by genAI. That’s error-prone and I know I’m not skilled at it.

What I did express an opinion on was that the article is terribly written, since I didn’t enjoy the parts I did read: they took time to get to the point and included a lot of sentences and words that could simply be deleted.

Are these all the authors alts or something?

And I’m saying that doesn’t follow at all. In fact, accuracy could be the only thing the author cares about, so he can read it over and make sure there are no factual mistakes, leaving the generated style as is. It’s honestly just so tiring having threads derailed by this endless perseveration people are doing over things being LLM-generated. This is the world we live in now.

Also, it’s kinda weird to immediately claim that people disagreeing with you have to be alts or something. Like you really can’t conceive of your opinion not being dominant?

I’m probably not the sharpest tool in the shed, and my eyes are bothering me, and I didn’t struggle with it. We’re just used to really dumbed down news articles.

I enjoy reading textbooks and scientific articles, and since Trump got into power I’ve read quite a few Supreme Court judgements, so I’m not comparing it to simplified news articles.

But this article contains

fentanyl

AI, run, it’s gonna kill you

-

Reporter: [REDACTED]

Reason: It’s just an opinion piece / analysis. There either needs to be a tag for these or removal.

Investigative journalism is just like your opinion, man.

🤣