- cross-posted to:

- technology@lemmy.ml

To me, this points to there being a lazy trick that all AI models end up converging on, one that optimizes away more revealing but potentially esoteric encodings of reality and so precludes a more nuanced expression of it.

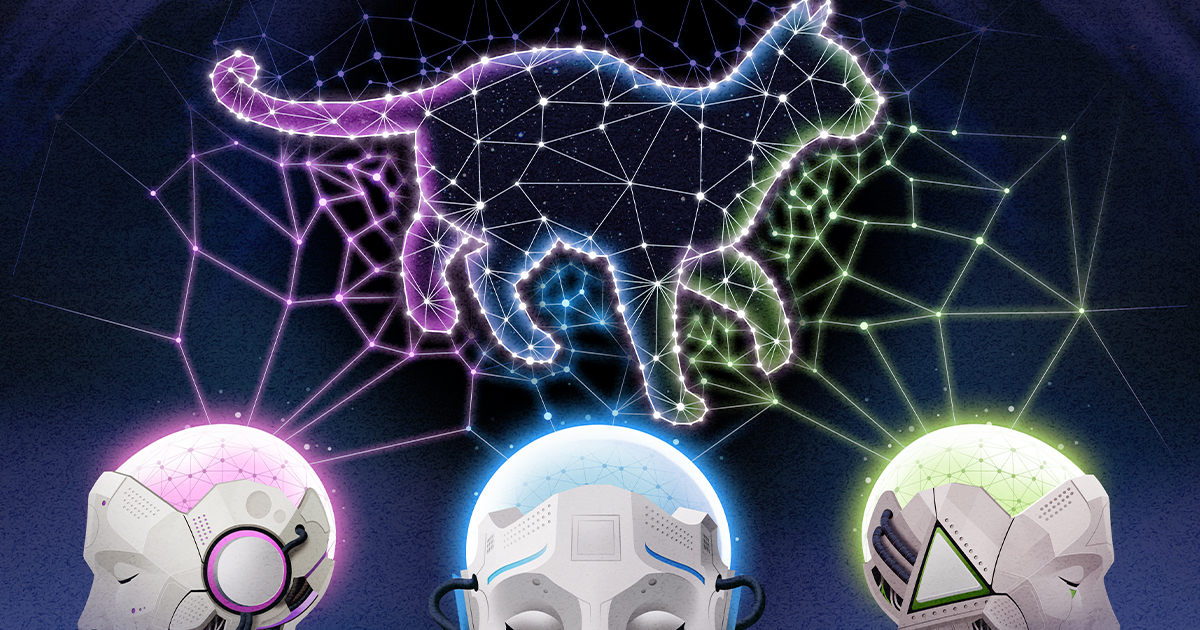

Researchers began to explore representational similarity among AI models with this approach in the mid-2010s and found that different models’ representations of the same concepts were often similar, though far from identical. Intriguingly, a few studies found that more powerful models seemed to have more similarities in their representations than weaker ones. One 2021 paper dubbed this the “Anna Karenina scenario,” a nod to the opening line of the classic Tolstoy novel. Perhaps successful AI models are all alike, and every unsuccessful model is unsuccessful in its own way.

I think it is far more likely and logical that the more you cook AI models, the more they end up looking the same, but not in a good way. It's like mixing paint: the more nice colors you mix together, the more you end up with the same muddy brown.

“The endeavor of science is to find the universals,” Isola said. “We could study the ways in which models are different or disagree, but that somehow has less explanatory power than identifying the commonalities.”

This is the most revealing quote of all for me. There is nothing inherent to the pursuit of science about universals; that is something people project onto science because it is emotionally satisfying. Yes, some scientists study universal aspects, and some look for large, general, shared similarities, but the vast vast vast vast vast vast majority of scientists study how each part of the universe is unique unto itself.

This isn’t science…

Efros noted that in the Wikipedia dataset that Huh used, the images and text contained very similar information by design. But most data we encounter in the world has features that resist translation. “There is a reason why you go to an art museum instead of just reading the catalog,” he said.

Computer/AI people really seem to have a hard time taking this lesson to heart, and that failure undermines absolutely everything they try to do that affects the real world.

From the article:

“In a 2024 paper, four AI researchers at the Massachusetts Institute of Technology argued that these hints of convergence are no fluke.”

Now, publish your own findings. No? I didn’t think so either.

Why the hell would I waste my time studying such a lame, environmentally destructive, fundamentally anti-humanist technology?

I am interested in generative potentials, not dead ends.

I see your point and admire your resolve: there is a lot to be done without AI.

Unfortunately, technology is a one-way trap, in my (part-time intellectual’s) opinion. Also, on the one hand, we have multibillionaire CEOs who do more to destroy the environment with the help of technology… and on the other hand, you can find scientists (with small to medium resources) discovering new medications and other impressive things nowadays, in part through the use of AI.

We live in a complex world in which a diversity of approaches is quite valuable. So thanks for your stance and your contributions to society and to Lemmy.