Gaywallet (they/it)

I’m gay

- 54 Posts

- 204 Comments

14·10 days ago


3·28 days ago

If you wish to discuss the controversy, feel free to make a post or link to an article. I’m personally not interested in hosting a link to these weirdos.

11·1 month ago

I find NFC stickers often require an annoyingly close connection (unless it’s a rather large antenna) and can be particularly finicky with certain cases and other attachments people put on phones. Realistically, they both take approximately the same amount of time, and it’s way cheaper to print a tag than it is to buy a single NFC sticker.

5·1 month ago

You’re welcome to have your own beliefs.

You are not, however, welcome to use those beliefs to invalidate someone else’s lived experience.

24·1 month ago

My fav application is scanning with a phone to immediately get on wifi

1·1 month ago

Started and finished 1000xResist over the course of a few days. In general I often find myself turned off by games with aging graphics, not for any good reason but more that I just find less of a pull towards them. I have more trouble being engaged or immersed, unless there’s a really strong art focus. This is one such game that I was worried I wouldn’t get pulled into, and in fact one that sat on a list of “maybe I’ll pick it up” because it was so highly reviewed but I was worried about that facet. It did not take very long for the game to grip me, however, because of its excellent storytelling. In fact, the game is almost entirely about storytelling, so there’s not a ton that I can share other than to say that it deals with a lot of difficult themes like intense trauma, bullying, having a tough childhood, extreme ideologies, and the long-term effects of violence. It also deals with more societal and human issues like protests, fascism, extreme duress, how self-interested and powerful individuals can cause serious problems and inflict violence, being optimistic or nihilistic in the face of overwhelming odds, and the threat of extinction.

While it isn’t a very long game, consisting of maybe a dozen hours of gameplay, I found myself putting it down for a while after certain chapters in order to process what just happened. The story throws a lot of curveballs and reveals information that can easily change the way you frame entire chapters of the story from earlier, but it never feels like it’s done in a way that inspires whiplash - nothing ever feels like a ‘sudden’ realization. I’m honestly not sure how much of that can be attributed to such a difficult story (if everything is fucked, what’s one more thing?) and how much is because they do such a masterful job of slowly unraveling the enigma of the story that very few pieces of information ever really feel out of place. There’s unfortunately only so much I can write without spoiling the story, but I will say that it was one of the best stories I’ve heard or played through and I’d thoroughly recommend it to anyone who likes a good story or wants to explore the themes I’ve mentioned above. Also, if anyone else out there played through this, I’d love to hear your thoughts on the story… what did you think? Do you have any lingering questions left over? Were there parts of the story that irked you or that you found particularly moving?

3·1 month ago

I suppose to wrap up my whole message in one closing statement: people who deny systematic inequality are braindead and for whatever reason, they were on my mind while reading this article.

In my mind, this is the whole purpose of regulation. A strong governing body can put in restrictions to ensure people follow the relevant standards. Environmental protection agencies, for example, help ensure that people who understand waste are involved in corporate production processes. Regulation around AI implementation and transparency could enforce that people think about these or that it at the very least goes through a proper review process. Think international review boards for academic studies, but applied to the implementation or design of AI.

> I’ll be curious what they find out about removing these biases, how do we even define a racist-less model? We have nothing to compare it to

AI ethics is a field which very much exists, and there are plenty of ways to measure and define how racist or biased a model is. The comparison groups are typically other demographics… such as in this article, where they compare AAE to standard English.

14·1 month ago

While it may be obvious to you, most people don’t have the data literacy to understand this, let alone use this information to decide where it can/should be implemented and how to counteract the baked-in bias. Unfortunately, as is mentioned in the article, people believe the problem is going away when it is not.

34·1 month ago

Just because there’s no ethical consumption under capitalism doesn’t mean that we have zero control over what we consume. It’s perfectly fine to hold a viewpoint of trying to minimize harm where you can and when you’re aware of it. Where you draw your lines doesn’t have to be perfect either (after all, we’re human).

28·1 month ago

wow what a thoughtful reply thank you, you really read that article and brought a lot of good points to the table for us to discuss

8·1 month ago

I do want to point out that social media use may be one of the first of these ‘evils’ to meet actual statistical significance on a large scale. I’ve seen meta-analyses which show an overall positive association with negative outcomes, as well as criticisms and no correlation found, but the sum of those (a meta-analysis of meta-analyses) shows a small positive association with “loneliness, self-esteem, life satisfaction, or self-reported depression, and somewhat stronger links to a thin body ideal and higher social capital.”

I do think this is generally a public health reflection though, in the same way that TV and video games can be public health problems - moderation and healthy interaction/use of course being the important part here. If you spend all day playing video games, your physical health might suffer, but it can be offset by playing games which keep you active or can be offset by doing physical activity. I believe the same can be true of social media, but is a much more complex subject. Managing mental health is a combination of many factors - for some it may simply be about framing how they interact with the platform. For others it may be about limiting screen time. Some individuals may find spending more time with friends off the platform to be enriching.

It’s a complicated subject, as all of the other ‘evils’ have always been, but it is an interesting one because it is one of the first I’ve personally seen where even kids are self-recognizing the harm social media has brought to them. Not only did they invent slang to create social pressures against being constantly online, but they have also started to self-organize and interact with government and local authority (school boards, etc.) to tackle the problem. This kind of self-awareness combined with action being taken at such a young age on this kind of scale is unique to social media - the kids who were watching a bunch of TV and playing video games didn’t start organizing about the harms of it; the harms were a narrative created solely by concerned parents.

9·1 month ago

probably not, in the same way that your grandma calls a video chat a facetime or your representative might call the internet a series of tubes

AI is the default word for any kind of machine magic now

1·1 month ago

Absolutely, thank you!

1·1 month ago

The pronouns are right there, in the display name. I’m confused, do they not show up for you? You’re on our instance so I’m guessing it’s not a front-end difference, but maybe you’re browsing on an app that doesn’t show it appropriately? Although I would mention their username itself includes the words “IsTrans” and is sourced from lemmy.blahaj.zone so those should be other key indicators.

I was hardly about to ban you over a small mistake. The only reason I even replied to this is that multiple people reported it and Emily herself came in and corrected you. The action was more about signaling to others that this is a safe space.

24·2 months ago

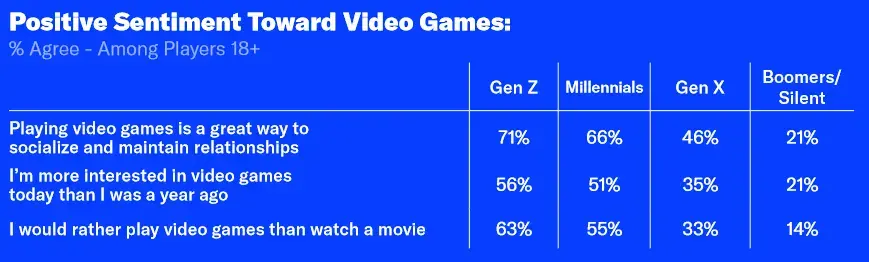

For those that are curious, here are the exact questions used and the %s by demographic

Generally speaking, I’d also fall into the “rather play games” category, but it really depends on the context. Unfortunately there aren’t too many couch co-op kind of games anymore, so if the goal is to spend time with someone, playing a video game often doesn’t work great.

1·2 months ago

oof, big flaw there

6·2 months ago

> Any information humanity has ever preserved in any format is worthless

It’s like this person only just discovered science, lol. Has this person never realized that bias is a thing? There’s a reason we learn to cite our sources, because people need the context of what bias is being shown. Entire civilizations have been erased by people who conquered them, do you really think they didn’t re-write the history of who these people are? Has this person never followed scientific advancement, where people test and validate that results can be reproduced?

Humans are absolutely gonna human. The author is right to realize that a single source holds a lot less factual accuracy than many sources, but it’s catastrophizing to call it worthless, and it ignores how additional information can add to or detract from a particular claim, so long as we examine the biases present in the creation of said information resources.

5·2 months ago

Cheers for this, found two games that seem interesting that I never heard about before!

5·2 months ago

This isn’t just about GPT. Of note in the article, one example:

> The AI assistant conducted a Breast Imaging Reporting and Data System (BI-RADS) assessment on each scan. Researchers knew beforehand which mammograms had cancer but set up the AI to provide an incorrect answer for a subset of the scans. When the AI provided an incorrect result, researchers found inexperienced and moderately experienced radiologists dropped their cancer-detecting accuracy from around 80% to about 22%. Very experienced radiologists’ accuracy dropped from nearly 80% to 45%.

In this case, researchers manually spoiled the results of a non-generative AI designed to highlight areas of interest. Being presented with incorrect information reduced the accuracy of the radiologist. This kind of bias/issue is important to highlight and is of critical importance when we talk about when and how to ethically introduce any form of computerized assistance in healthcare.