sounds nice, I’ll believe it when I see it

yeah i mean, there’s no way lol. even if the tech gets here that quickly there’s 0% chance prices come down significantly on lower capacity drives. these’ll be at least $500 and possibly far far more

20 TB at that price range could bankrupt some small cloud providers. Self-hosting would be much easier without having to worry about space. That’s IF the price stays the same, but we’ll see.

I don’t think the vast majority use cloud storage because it’s cheap. The vast majority use it because they are unwilling or unable to set up their own.

Hosting an Internet facing service out of your own house requires constant maintenance for security.

I self host, and drop encrypted backups onto a cloud storage provider. If anything, cheap storage is going to cost me more because I’ll be inclined to back more up.

Damn, you’re right! That cheap SSD could bankrupt us! 🙈😆

Right. And if you want to self host with some geographic redundancy, it requires having friends or family with a good Internet connection who are willing to let you have a server at their place. Not impossible, but can be annoying.

I’m setting up a raspberry pi+HDD at family’s house, with wireguard to my home network. Fun stuff, but it’s not an off-the-shelf solution, especially when you consider that it’s not my Internet access, it’s theirs, so trying to be polite with bandwidth/data caps means it’s a bit kneecapped.

Yeah, there are a lot of security layers and checks that big providers handle that most beginner users don’t even know exist.

I can’t imagine the damage from someone breaching your server and reaching your personal network.

Also an extra machine, if you plan to have 99% uptime.
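For a sense of what 99% actually allows, here’s a rough back-of-envelope sketch. The function name is made up for illustration, and it assumes a plain 365-day year:

```python
HOURS_PER_YEAR = 24 * 365  # assumption: non-leap year

def downtime_hours_per_year(uptime_fraction):
    """Hours per year a service may be down at the given uptime target."""
    return (1.0 - uptime_fraction) * HOURS_PER_YEAR

for target in (0.99, 0.999):
    hours = downtime_hours_per_year(target)
    print(f"{target:.1%} uptime allows about {hours:.1f} h of downtime per year")
```

So 99% leaves a bit over three and a half days a year for reboots, drive swaps and outages, which is doable on one box if nothing goes badly wrong.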

I’d be interested what the wear-leveling and write-cycles look like. $250 for 20TB is half the current price of decent spinning rust, but if they’ll die in a year because they’re part of a Ceph cluster or ZFS array, that’s gonna be a no from me, dawg.

My bulk media is practically WORM anyway. BRING IT ON!

$250 for 20TB is half the current price of decent spinning rust

No? Like, not at all.

WD Red Pro 20TB = $420 MSRP, $380 cheapest I’ve found. Not considering taxes/shipping in that

So, you’re splitting hairs by saying that’s not half. Point stands
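Quick sanity check on the “half” claim, using only the numbers quoted in this thread ($250 for the rumored SSD, $420 MSRP and $380 street for the WD Red Pro 20TB). The function names are just made up for this sketch:

```python
CAPACITY_TB = 20

# USD, pre-tax, as quoted in the thread
SSD_PRICE = 250
HDD_PRICES = {
    "WD Red Pro 20TB (MSRP)": 420,
    "WD Red Pro 20TB (cheapest found)": 380,
}

def price_per_tb(price_usd, capacity_tb=CAPACITY_TB):
    """Cost in USD per terabyte."""
    return price_usd / capacity_tb

def ssd_fraction_of(hdd_price, ssd_price=SSD_PRICE):
    """SSD price expressed as a fraction of the HDD price."""
    return ssd_price / hdd_price

print(f"SSD: ${price_per_tb(SSD_PRICE):.2f}/TB")
for name, price in HDD_PRICES.items():
    print(f"{name}: ${price_per_tb(price):.2f}/TB, "
          f"SSD is {ssd_fraction_of(price):.0%} of that")
```

That works out to about 60% of MSRP and about 66% of the street price, so not literally half, but still clearly cheaper per TB.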

16-18 TB HDDs have been at that price for like 5 years, it doesn’t mean most people buy them

Do I need a 20TB boot drive? No. Do I want it enough to pay $250? Yes, absolutely. I’m running 1TB now and I need to manage my space far more often than I’d like, despite the fact that I keep my multimedia on external mass storage. Also, sometimes the performance of that external HD really is a hindrance. I’d love to just have (almost) everything on my primary volume and never worry about it.

It’s kind of weird how I have less internal storage today than I did 15 years ago. I mean, it’s like 50 times faster, but still.

I’m not super-skeptical about the pricing. This stuff can’t stay expensive forever, and 2027 is still a ways off.

Honestly, if they could just get 8tb ssds down to $200-250 I’d be happy with sata interfaces

I get a sense that the tipping point for the final HDD to SSD transition will be soon. A lot of people are willing to spend a little more for SSD when the capacity-to-price is almost as good as HDD. I think this will first spike demand followed by spiked production followed by a significant drop in price after production ramps up (as long as the companies avoid any economic funny business).

I built my NAS years ago and the drives are still doing fine. I can’t wait though to upgrade that thing to SSDs. This thing is loud and sucks a lot of power right now.

Nah there are still use cases where longevity is most important. You can’t set an SSD in a closet for 20 years and expect to still have your data. HDDs also have longer active life expectancies AIUI.

Totally, there’s still a use for tape and optical, too. But SSD will be the dominant media soon.

That’s a lot of… homework files.

And Linux ISOs!

It would be amazing if PCIe lanes became the predominant limiting factor, rather than drive cost, for building large storage arrays. What a world it would be, when even Epyc and its lanes-for-days proves to be insufficient for large Chia miners, err, Plex servers, uh, Linux ISO mirrors.

So is that enough for the Modern Warfare installer or

Maybe I should put off building a NAS for the time being.

Yeah…. I think I’ll still need to pick up some drives this year, but I might do less robust of a build out

I’ve switched over to one-disk redundancy to stretch out my spinning rust. If SSDs come down just a little bit more it’ll be worth it to replace my array. Just hoping two drives don’t die before then.

Wow, the future seems bright for my archives

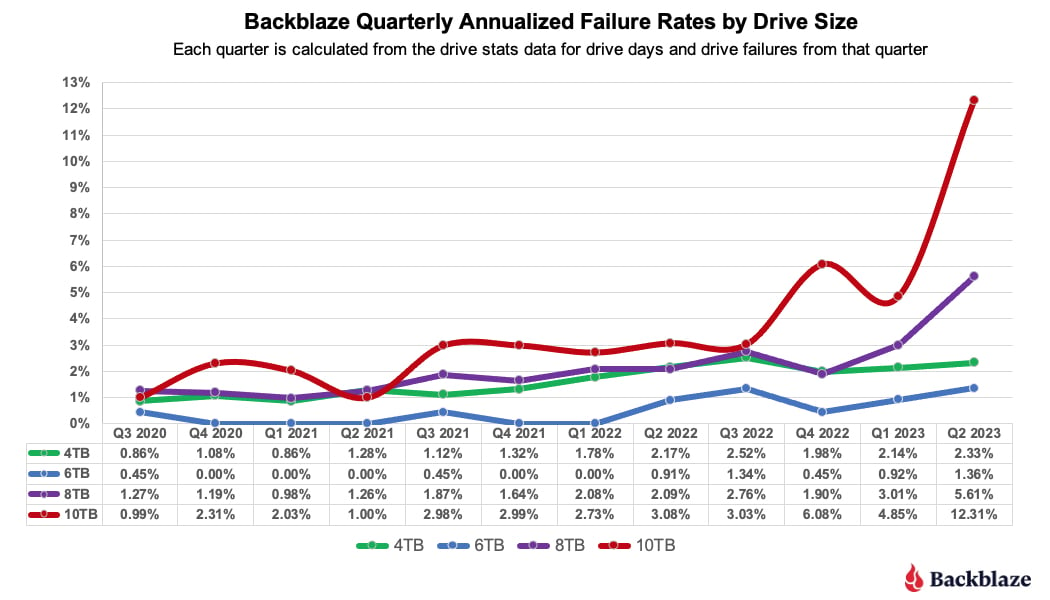

Generally the more layers you add to an SSD the less robust it is. If this is real your data will be corrupt within a week.

I mean you can say the same for spinning magnetic platters. “The more bits you’re trying to squeeze into a fixed size HDD the less robust it is.”

I’m not saying these guys can do it, but dismissing higher densities of storage out of hand seems a bit glib considering the last 60 years of progress and innovation.

They’re a flat-storager.

Should work perfectly with Flatpak apps then!

That’s never really been an issue with HDDs as far as I’m aware, although 10k rpm drives were known to be more fragile IIRC. The lower life and robustness of QLC vs SLC flash is well known.

Because drives use ECC and spare sectors to give the illusion of reliability just like QLC.

Here is reliability vs drive size.

Decades ago, a colleague of mine (who once worked in hard drive design) said, “Oh, hard drives stopped reading 1’s and 0’s years ago. Now they compute the probability that the data just read was a 1 or a 0.”

That is exactly what ppl like you said when SLC came out and TLC came out and QLC came out…

Look back now.

And they use far more energy. Meanwhile spinning disks can sit idle with all of my hoarded data.

Google says HDD idle is higher than SSD. SSD is higher under load, but it’s important to look at total energy used. If the SSD spikes high, but is 10x faster, the total energy used will be less.
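To put the “spikes high but finishes faster” point in numbers: energy is just power times time. The wattages and durations below are made-up illustration values, not measurements of any real drive:

```python
def energy_joules(power_watts, seconds):
    """Energy used while drawing a constant power for a given time."""
    return power_watts * seconds

# Hypothetical numbers: the SSD draws 2x the power but is 10x faster.
hdd_active_w, hdd_seconds = 10.0, 100.0
ssd_active_w, ssd_seconds = 20.0, 10.0

hdd_j = energy_joules(hdd_active_w, hdd_seconds)
ssd_j = energy_joules(ssd_active_w, ssd_seconds)

print(f"HDD: {hdd_j:.0f} J, SSD: {ssd_j:.0f} J for the same job")
```

With those assumed figures the SSD uses a fifth of the energy for the same transfer, even though its peak draw is double.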

The Seagate Exos drives in my array consume 8-9W each at idle, and 12-13W active. I doubt that an NVMe drive would consume that much at idle, which will be most of the time for data storage.
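For scale, here’s what those Exos figures add up to over a year if a drive just sits there. This is a rough sketch that takes the midpoints of the quoted ranges (8.5W idle, 12.5W active) and assumes the drive never spins down. The function name is hypothetical:

```python
HOURS_PER_YEAR = 24 * 365  # assumption: non-leap year, drive powered 24/7

def annual_kwh(watts, hours=HOURS_PER_YEAR):
    """Energy in kWh for a constant draw over the given number of hours."""
    return watts * hours / 1000.0

idle_kwh = annual_kwh(8.5)     # midpoint of the quoted 8-9W idle
active_kwh = annual_kwh(12.5)  # midpoint of the quoted 12-13W active

print(f"idle all year:   {idle_kwh:.1f} kWh per drive")
print(f"active all year: {active_kwh:.1f} kWh per drive")
```

Multiply by the number of drives in the array and by your electricity rate to see what idle draw actually costs. Plug in an SSD’s idle wattage from its datasheet to compare.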

AFAIK power consumption increases with size on SSDs. And that’s not the case with spinning disks. That’s what I tried to point out, from the perspective of hoarding data (idle disks) bigger sizes are not something to be pursued. Then of course there’s the use case of needing a high volume fast storage (e.g. zfs cache), for which use case these are great!